The past four months have been the craziest time of my life. At Google, you get thrown into a project immediately; what kind of project depends on your luck. If you're lucky, you get a toy project (a small project meant to gently bring you up to speed with your team's "ways of life", where "life" == "coding"); if not, you get your main project right away. Either way, your host is there to help you out all the time. Apparently, that last part is not true for all interns: some interns have hosts who have to travel to other Google offices, or hosts who are product managers (who, obviously, are very busy), etc. I'm pretty lucky that my host actually sits next to me (well, most interns have similar arrangements), so I can ask questions easily.

Coding-wise, most hosts will expect you to have a good sense of coding. They expect you to know your object-oriented programming and, if you have not yet learned the language you're going to code in, they expect you to pick it up on the fly. It's not as bad as it sounds. I had no experience at all with C++ before I got into Google and, right now, I would say that I'm confident with my C++ (not an expert or anything, but good enough to program almost anything you throw at me). Fortunately, at Google we try to avoid the weird, crazy parts of a language. C++, for example, can be used procedurally, in an object-oriented way, in a functional way, and for template metaprogramming. Functional programming and template metaprogramming are pretty much up there in the bizarre world of the advanced (or, more accurately, obscure) parts of the C++ standard, and only a few people know how to code in that style. Fortunately, we don't need to. That's one of the best things about working at Google: we can easily learn other people's code because we don't pepper our code with those obscurities (well, sometimes it can't be helped, but those cases should be very, very rare). I also got to brush up my JavaScript and HTML a lot. I would say that I've gone from being okay with JavaScript to very good with it.

It's okay if you don't know what design patterns are, what C++ templates are, or that for-in in JavaScript does not do what you expect when used with arrays. But you're expected to learn them and apply them. It definitely helps a lot to have a strong grounding in OOP.

Most days will be filled with coding, so if coding is your cup of tea, you'll have a good time here. If you hate coding, try to like it before coming here. You definitely need to code here (less if you're doing research, a lot if you're doing software engineering).
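To make the for-in pitfall concrete, here is a small sketch. It's written in TypeScript, which behaves exactly like JavaScript here; the `fruits` array and the extra `note` property are made up purely for illustration. The point is that `for...in` walks an object's enumerable property *keys* (as strings), including any non-index property that ends up on the array, whereas `for...of` and `forEach` visit only the elements.

```typescript
// A plain array of three elements.
const fruits: string[] = ["apple", "banana", "cherry"];

// Illustrative only: attach an extra enumerable property to the array.
Object.assign(fruits, { note: "not an element" });

// for...in yields property KEYS as strings, and it also picks up "note".
const forInKeys: string[] = [];
for (const key in fruits) {
  forInKeys.push(key);
}

// for...of yields the element VALUES only.
const forOfValues: string[] = [];
for (const value of fruits) {
  forOfValues.push(value);
}

// forEach also visits only the elements, with a numeric index.
const indices: number[] = [];
fruits.forEach((_value, index) => {
  indices.push(index);
});

console.log(forInKeys);   // [ '0', '1', '2', 'note' ]
console.log(forOfValues); // [ 'apple', 'banana', 'cherry' ]
console.log(indices);     // [ 0, 1, 2 ]
```

In practice, reach for `for...of` (or `forEach`) when you want an array's elements, and keep `for...in` for enumerating an object's keys.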

As interns, we have most of the privileges full-time Googlers have. This is really good! We are not slowed down by an inability to access, say, code that some other Googler wrote. (This seems to be a problem at some other companies, where everything is confidential and interns need to go through several layers of bureaucracy just to get access to a piece of code, a wiki page, a design document, etc.) Unfortunately, whatever I worked on at Google stays within Google. Inside, we're very open and talk about almost everything. You get to learn whatever you want to learn (and can squeeze into your brain) in addition to what your host throws at you. You get to discuss things freely with other Googlers (interns included). But once you talk to non-Googlers, everything becomes a black box. I found it really hard to talk about my work with non-Googlers, since I have to resort to generalities instead of specifics. For example, I can say I'm working on a project that uses something like MapReduce, but not exactly MapReduce. Fortunately, Google has open-sourced quite a few things and published papers on others, so the stuff that is already out in the open, we can freely discuss with anyone.

If you're lucky enough to get an internship at Google, I can guarantee you one thing: if you have the right attitude, you will learn mountains of stuff. And it's really, really interesting stuff. You also get to learn "common sense" stuff (programming techniques, points of view, knowledge, etc.) that is apparently not very common elsewhere (it really, really is just common sense, nothing extraordinary). Furthermore, there is a series of internal talks (dubbed Tech Talks) that any intern can attend. These Tech Talks range from authors' talks, to visiting research scientists presenting their work, to Google tools, to development being done by other teams. They also include some really, really interesting talks that will benefit you outside Google. Unfortunately again, I can't give you any sample talks (an NDA is one scary thing). On average, I attended 1.5 Tech Talks a week.

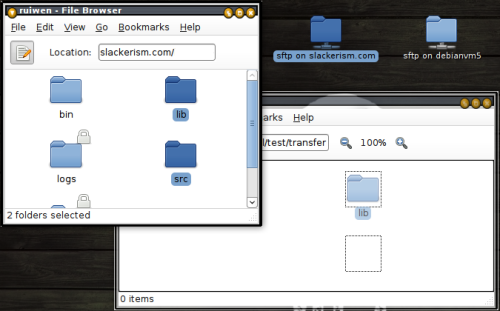

Oh, and did I tell you that you get a workstation to yourself for your work? (Of course the workstation is not yours, but you're the only one using it while you're there for the internship.) You get the same amount of desk space and shelf space as anyone else near you, too! You might get additional privileges depending on the kind of work you do. Our code repository is pretty awesome (it beats CVS or SVN by orders of magnitude!). We can submit code wherever we want (there is a standard procedure for submitting code, but it doesn't differentiate between full-time Googlers and interns, and the procedure is pretty straightforward).

Now, in addition to things going into our brains, interns also have things going into our stomachs! Yes, like all other Googlers, we get free buffet and gourmet meals in any of the 18+ cafes at Google, 3 times a day, 5 days a week (on weekends, only 1 cafe is open, serving lunch and dinner). The food here is awesome. Just imagine spending $25 to $50 outside for a meal, 3 times a day, 5 days a week. That's probably roughly what Google food is worth. And the food is very, very healthy! We also have access to all the micro-kitchens (pantries stocked with tonnes of snacks and cereal). Get ready to gain 15 pounds over a 12-week internship. Other privileges include gym access (to try to shave off those 15 pounds), the infinite pool (a water treadmill), the internal mailing lists (ranging from road biking to photography to technical stuff; there is even a Singaporeans mailing list), intern-only events (events full-time Googlers don't even have access to ;)), and TGIF.

TGIF is a weekly event (you guessed it: it's held every Friday, Thank God It's Friday!) where the founders, Larry and Sergey, share significant things that Google as a company did in the past week. The content of TGIF is mostly confidential (think Google Chrome, just a few weeks before its general public release).

In short, if you're an intern at Google, you can consider yourself a Googler (after the first few weeks, that is; during the first few weeks, you're called a Noogler rather than a Googler; don't ask me what Noogler stands for, I don't know!). Since my internship is 7 months long, I'm lucky enough that right now I'm working as if I were a full-timer, doing the things any other full-timer does (minus a few, but not many).

I would encourage the programmers out there to apply to Google. If you're not a programmer but really have a passion for programming and are willing to slog through learning a programming language quickly, you're most welcome to apply. "But Google is so difficult to get into, you have to be the smartest student around!" Or so you complain. How exactly do you define smartness? Is it a perfect CAP of 5.0? Is it programming skill? Is it common sense? If you don't try, you'll never know.

That said, you know yourself best. So I'll just throw out some stereotypes of Google interns. Interns are usually smart (in the sense that if you throw a complex algorithm at them, they should figure it out easily, and if you throw a difficult open-ended problem at them, they should figure out how to solve it in not too long a time). We usually code well (emphasis on usually; not all interns code that well). We are independent (there are many learning resources available at Google, and you are responsible for picking the best way to learn; that includes asking other Googlers ;)). We are fun-loving (most interns have hobbies they always pursue on weekends: I love manga and biking, a friend of mine plays volleyball every day, yet another loves white-water rafting, etc.). And we don't give up, yet we know when to give up (figure that one out yourself).

Check out Google's jobs page if you are interested. You will need to submit your resume online (during the stipulated period), and the recruitment team will do a first screening based on your resume. If you pass that stage, you will be assigned a recruiter who will be your friend throughout the entire application and selection period. You'll have 2 phone interviews, both of them technical. Each one means five minutes of chit-chat, 50 minutes of technical questions, and 5 minutes for the interviewer to answer your questions. Mind you, only your answers to the technical part matter (they don't care if you are not the chattiest person on earth, or if you don't have any questions for them). You can't really bluff your way through the technical interview. They'll make sure you get questions you don't know how to answer. Thus, preparing by reading past interview questions will not help you; on the other hand, brushing up on your basic algorithms (the first half or so of this book, a book that I think every computer science student should have on their bookshelf) will go a long way. Be confident and talk! Don't keep mum throughout the interview. The interviewers want to know how you think, not just your final answer. It helps if you're particularly strong in an object-oriented programming language (e.g. Java, C++, Python, Objective-C, or JavaScript). If you get the job, you can expect at least 12 weeks of exciting life in one of the Google offices (you get to pick which one, but acceptance to an office depends on internship openings in the particular office(s) you applied to). You apply as a software engineer, though, and don't get to choose which team you'll be working with.

The last thing I want to bring up is the fact that a lot of NUS students (or SoC students, at least) give up before they even try! I've heard that NTU consistently manages to send a few students to Google for internships. SoC? Nay! This year, we only have 1 undergraduate and 1 PhD student interning here! Are SoC students not as good as NTU students? I don't think so! But we do seem to give up when we hear the name "Google".

"No, it's too hard."

"No, it's impossible for me to get in. Why apply?"

"It's just a waste of time."

If that's what's in your mind right now, kick those thoughts out. Be honest with yourself: if you think you have a 5-10% chance of getting into Google, then that's good enough! Again, a qualifier: you do need to know your own limits. If you're consistently scoring below 3.0 in CAP (hey, if you're smart, you should get at least 3.5 easily without studying), then maybe you should rethink your priorities. You should be pulling that CAP up first! After all, if I recall correctly, the jobs page indicates that you need a GPA of 3.0/4.0 to get in (that is a B/B+ average).

To put you at ease: I'm probably one of the unlikeliest people to get into Google, but I did anyway. I managed to pull my CAP up to 3.83 from 3.66 the previous semester, just before I applied to Google, so that saved me a little trouble. (Right now, I've managed to pull it up to 4.02, but hey, that's beside the point! At the time, my CAP was borderline!) Nobody would think of me as someone who's smart (at least not in terms of CAP). I did dream of working at places like Google, but I didn't have any real hope of getting in with that kind of CAP. Luckily, I had a professor who kept insisting that I apply. So I did. And here I am. If I can, you can too! (Wow, what a cliché!)

So, I guess this is the end of my week of blogging! It's been great posting all sorts of weird posts here. Hopefully, next year, someone will tell me that he/she has been accepted for an internship at Google. If that's my only achievement from this series of posts, I'm happy enough. (:

Ja ne! (See you!)

As a final parting gift, here is something pretty cool to watch! (:

- Chris